ViDRILO: The Visual and Depth Robot Indoor Localization with Objects information dataset

Tools

The ViDRILO dataset is released together with a MATLAB toolbox that provides the following capabilities:

- Generation and evaluation of multimodal semantic localization systems using sequences of the dataset for training/test

- Descriptor generation from perspective images

- GIST

- Pyramid Histogram of Oriented Gradients (PHOG)

- Greyscale Histogram

- Descriptor generation from point clouds

- Ensemble of Shape Functions (ESF)

- Depth Histogram

- Learning and classification stages

- Support Vector Machines

- k-Nearest Neighbor (kNN)

- Random Forest

- Evaluation of the results: generation of graphics

- Confusion matrix for room classification

- Precision/recall for object recognition

- ROC curves for object recognition

- Evaluation of the results: metrics

- Accuracy

- Root Mean Squared Error (RMS)

- True Positive and False Positive Rates

- Precision

- Recall

- F1-Score

- Area Under the Curve (AUC)

- Precision at recall levels 0.25, 0.50 and 0.75 (object recognition)

- Generation of dataset statistics

- Room distribution

- Object distribution

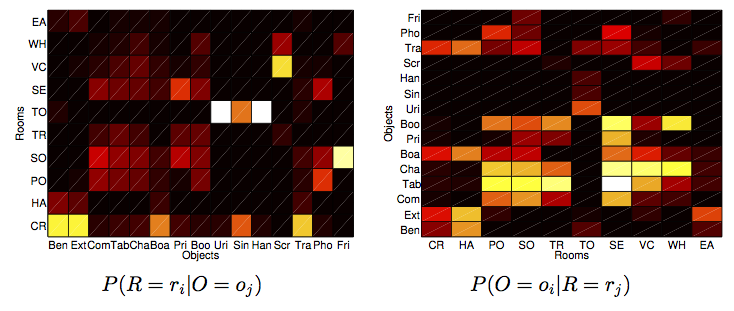

- Object and room relationships: P(Room=r|Object=o) and P(Object=o|Room=r)

- Visual representation of the dataset point cloud files, with no additional requirements such as the PCL library or OpenCV

- Visualization of dataset frames

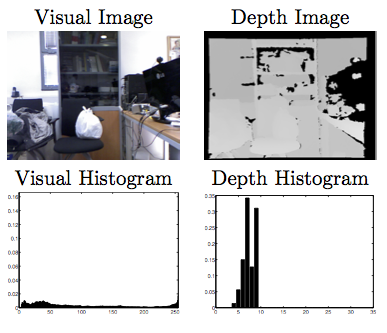

- Visual and depth images

- Greyscale and depth histograms

The toolbox includes a complete user guide with detailed instructions for installation and use.
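The ROC-area and precision-at-recall metrics listed above are computed from the scores of a per-object classifier. The following Python sketch shows one standard way to compute them for a single object; it is not taken from the toolbox, and the function names are hypothetical. The AUC is the fraction of (positive, negative) frame pairs in which the positive frame is scored higher (ties count one half), which equals the area under the ROC curve. Precision at a recall level is computed as interpolated precision (the best precision reached at or above that recall), a common definition that the toolbox does not necessarily follow.

```python
from typing import List, Sequence, Tuple


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve: fraction of (positive, negative) pairs in
    which the positive sample gets the higher score; ties count as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def pr_points(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float]]:
    """(recall, precision) after accepting the top-1, top-2, ... scored samples."""
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive sample")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    points, tp = [], 0
    for rank, i in enumerate(order, start=1):
        tp += labels[i]
        points.append((tp / total_pos, tp / rank))
    return points


def precision_at_recall(scores: Sequence[float], labels: Sequence[int], level: float) -> float:
    """Interpolated precision: the best precision reached at recall >= level."""
    reachable = [p for r, p in pr_points(scores, labels) if r >= level]
    return max(reachable) if reachable else 0.0


# Hypothetical classifier scores for one object over five test frames
# (1 = object present in the frame, 0 = absent).
scores = [0.9, 0.8, 0.7, 0.6, 0.5]
labels = [1, 0, 1, 0, 0]
print(round(roc_auc(scores, labels), 4))  # 0.8333
print([round(precision_at_recall(scores, labels, r), 4) for r in (0.25, 0.50, 0.75)])
# [1.0, 1.0, 0.6667]
```

Interpolated precision can only stay equal or drop as the recall level rises, so it is a conservative summary of the precision/recall curve.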

Toolbox Files

The ViDRILO toolbox has the following main files:

- ConfigurationVidrilo.mat: Configuration file with the annotations of the whole dataset and the paths to the sequences.

- visualizePointCloud.m: RGB-D (visual image and point cloud file) visualization.

- showDatasetOverallStats.m: Visualization of dataset statistics.

- evaluateExternalResults.m: Evaluates the room classification and object recognition results for a specific dataset sequence, stored in an external .csv file.

- runVidriloClassifier.m: Room classification and object recognition for a given combination of:

- Training and test sequences

- Source of information: visual, depth or both combined

- Type of classification model: SVM, kNN or RFs

- Type of visual descriptor: GIST, PHOG or Greyscale Histogram

- Type of depth descriptor: ESF or Depth Histogram

The visualizePointCloud function generates MATLAB figures with a visual representation of the point cloud files. Two types of visualization are provided. The first type shows the colour and depth information of a single frame together with some features extracted from it; specifically, it shows the histogram extracted from each image.

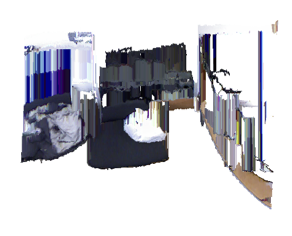
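The greyscale and depth histograms shown in this view are simple global descriptors. The Python sketch below is not the toolbox's code: it bins the values of one image into fixed-width bins and normalises the counts to sum to 1, so that images with different numbers of valid pixels remain comparable. The function names, the bin count, the depth range in metres and the treatment of a zero depth as a missing reading are all assumptions.

```python
from typing import List, Sequence


def normalized_histogram(values: Sequence[float], bins: int,
                         lo: float, hi: float) -> List[float]:
    """Fixed-width histogram of the values in [lo, hi], normalised to sum to 1.
    Values outside the range (or NaN) are ignored."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        if lo <= v <= hi:
            # v == hi would index one past the end, so clamp it to the last bin
            counts[min(int((v - lo) / width), bins - 1)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * bins


def grey_histogram(grey_pixels: Sequence[int], bins: int = 16) -> List[float]:
    # 8-bit intensities in [0, 255]; the bin count is a hypothetical choice.
    return normalized_histogram(grey_pixels, bins, 0, 255)


def depth_histogram(depths_m: Sequence[float], bins: int = 16,
                    max_depth_m: float = 10.0) -> List[float]:
    # Depth in metres; 0 (or NaN) is assumed to mark a missing reading.
    # bins and max_depth_m are hypothetical choices.
    valid = [d for d in depths_m if d > 0]
    return normalized_histogram(valid, bins, 0.0, max_depth_m)


print(grey_histogram([0, 0, 128, 255], bins=2))        # [0.5, 0.5]
print(depth_histogram([0.0, 1.0, 6.0, 12.0], bins=2))  # [0.5, 0.5]
```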

The second type loads a point cloud file into a manipulable MATLAB surface figure (see next figure), in which the viewpoint of the scene can be changed. Although more powerful alternatives exist (such as the PCL viewer), the released PCD visualizer does not require the installation of any additional software.
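One way to draw a point cloud as a surface without extra software is to exploit an organised cloud, i.e. one stored as a WIDTH x HEIGHT grid that matches the depth image, so that each point's z value can be placed at its pixel position. The Python sketch below reads the plain-text (ASCII) variant of the PCD format used by PCL and reshapes the z values into such a grid. It assumes ASCII encoding, COUNT 1 for every field and an organised cloud; whether the ViDRILO files meet these assumptions is not stated here, binary PCD files are not handled, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple


def parse_ascii_pcd(text: str) -> Tuple[Dict[str, List[str]], List[List[float]]]:
    """Split an ASCII PCD file into its header (keyword -> values) and its
    points (one list of floats per line, in FIELDS order; 'nan' is kept)."""
    header: Dict[str, List[str]] = {}
    lines = text.splitlines()
    for n, raw in enumerate(lines):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        key, *values = line.split()
        header[key] = values
        if key == "DATA":  # the header ends with the DATA line
            if values != ["ascii"]:
                raise ValueError("this sketch only reads 'DATA ascii' files")
            body = lines[n + 1:]
            break
    else:
        raise ValueError("no DATA line found")
    points = [[float(v) for v in row.split()] for row in body if row.strip()]
    return header, points


def depth_grid(header: Dict[str, List[str]], points: List[List[float]]) -> List[List[float]]:
    """Reshape an organised cloud (stored row by row) into HEIGHT rows of
    WIDTH z values, the layout a surface plot expects."""
    width, height = int(header["WIDTH"][0]), int(header["HEIGHT"][0])
    if width * height != len(points):
        raise ValueError("number of points does not match WIDTH x HEIGHT")
    z = header["FIELDS"].index("z")
    return [[points[r * width + c][z] for c in range(width)] for r in range(height)]


SAMPLE = """# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z
SIZE 4 4 4
TYPE F F F
COUNT 1 1 1
WIDTH 2
HEIGHT 2
VIEWPOINT 0 0 0 1 0 0 0
POINTS 4
DATA ascii
0.0 0.0 1.0
0.1 0.0 1.5
0.0 0.1 nan
0.1 0.1 2.0
"""
header, points = parse_ascii_pcd(SAMPLE)
print(depth_grid(header, points))  # [[1.0, 1.5], [nan, 2.0]]
```

Missing depth readings stay as NaN in the grid, which surface-plotting tools typically leave as holes rather than drawing as zero depth.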

The showDatasetOverallStats function loads the configuration files and generates two graphs with the following information: the probability of being in a given room once an object has been recognized, P(Room=r|Object=o), and vice versa, P(Object=o|Room=r).

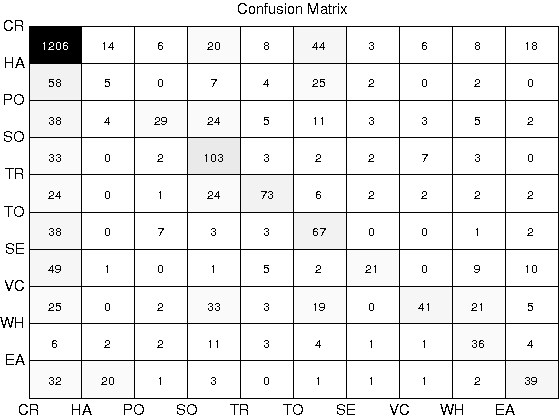

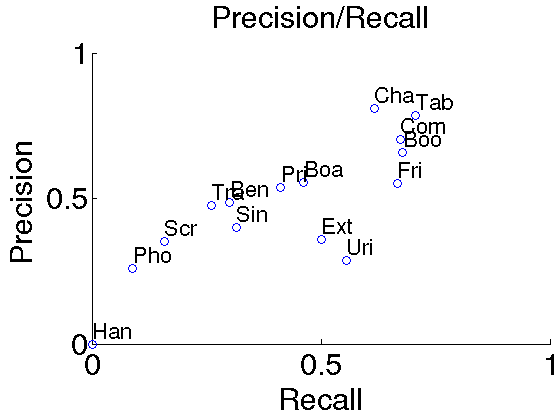

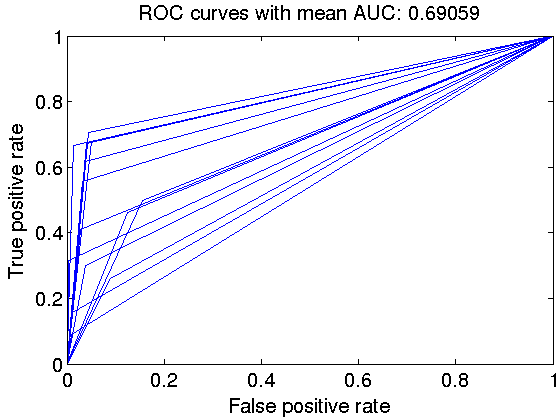
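Both conditional probabilities plotted by showDatasetOverallStats can be estimated by counting annotated frames. The Python sketch below assumes each frame is annotated with one room label and the set of objects visible in it, and uses the plain frame-count estimates P(Object=o|Room=r) = N(r,o)/N(r) and P(Room=r|Object=o) = N(r,o)/N(o), where N counts frames. The function name and data layout are hypothetical; the toolbox may compute these figures differently.

```python
from collections import Counter
from typing import Iterable, Set, Tuple

Frame = Tuple[str, Set[str]]  # (room label, objects present in the frame)


def room_object_probabilities(frames: Iterable[Frame]):
    """Frame-count estimates of P(Object=o | Room=r) and P(Room=r | Object=o),
    both returned as dicts keyed by (room, object)."""
    room_n: Counter = Counter()
    object_n: Counter = Counter()
    joint_n: Counter = Counter()
    for room, objects in frames:
        room_n[room] += 1
        for obj in objects:  # a set, so each object counts once per frame
            object_n[obj] += 1
            joint_n[(room, obj)] += 1
    p_obj_given_room = {(r, o): n / room_n[r] for (r, o), n in joint_n.items()}
    p_room_given_obj = {(r, o): n / object_n[o] for (r, o), n in joint_n.items()}
    return p_obj_given_room, p_room_given_obj


# Made-up frames, using room and object codes as abbreviated in the toolbox output.
frames = [("CR", {"Cha", "Tab"}), ("CR", {"Cha"}), ("TO", {"Sin", "Cha"}), ("TO", set())]
p_o_r, p_r_o = room_object_probabilities(frames)
print(p_o_r[("CR", "Tab")], round(p_r_o[("CR", "Cha")], 3))  # 0.5 0.667
```

Pairs that never co-occur are simply absent from both dictionaries, which corresponds to a probability of zero.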

The runVidriloClassifier function performs the basic steps of both visual place classification and object recognition: descriptor generation, learning, classification, and evaluation of the results. For descriptor generation, we include five global descriptors (GIST, PHOG, ESF, Greyscale Histogram and Depth Histogram). The generated descriptors are then used as input to a classification model, for which we include three different classifiers: SVMs, kNN and RFs. For room classification, we train a single multi-class classifier, whereas for object recognition we train one binary classifier per object.

The evaluation stage computes different statistics for room classification and object recognition. For room decisions, a room confusion matrix is generated. For object recognition, the toolbox generates a precision/recall graph and a figure with the ROC curves for all the objects. Other metrics are also computed: accuracy, RMS error, precision, recall, AUC, and precision at recall levels.
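The per-room figures reported by the evaluation (TP rate, FP rate, precision, recall and F1-score) follow from the confusion matrix by treating each room as a one-vs-rest problem. The Python sketch below is not the toolbox's implementation, and its function names are hypothetical. Weighting the W.Avg row by each room's number of test frames is an assumption, although it is consistent with the weighted recall in the sample output matching the accuracy. The RMS error is not reproduced because its exact definition is not given here.

```python
from typing import Dict, List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     classes: Sequence[str]) -> List[List[int]]:
    """Rows: true room, columns: predicted room."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_metrics(y_true: Sequence[str], y_pred: Sequence[str]
                      ) -> Tuple[Dict[str, Dict[str, float]], Dict[str, float], float]:
    """One-vs-rest TP rate, FP rate, precision, recall and F1 per class,
    their support-weighted averages, and overall accuracy."""
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))
    rows: Dict[str, Dict[str, float]] = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        tn = n - tp - fp - fn
        recall = tp / (tp + fn) if tp + fn else 0.0  # identical to the TP rate
        precision = tp / (tp + fp) if tp + fp else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        rows[c] = {"tp_rate": recall, "fp_rate": fp / (fp + tn) if fp + tn else 0.0,
                   "precision": precision, "recall": recall, "f1": f1,
                   "support": float(tp + fn)}
    # Weight each class by its number of true samples (assumed W.Avg definition).
    weighted = {k: sum(r[k] * r["support"] for r in rows.values()) / n
                for k in ("tp_rate", "fp_rate", "precision", "recall", "f1")}
    accuracy = sum(t == p for t, p in pairs) / n
    return rows, weighted, accuracy


# Made-up predictions for five test frames.
y_true = ["CR", "CR", "CR", "HA", "TO"]
y_pred = ["CR", "CR", "HA", "HA", "CR"]
print(confusion_matrix(y_true, y_pred, ["CR", "HA", "TO"]))  # [[2, 1, 0], [0, 1, 0], [1, 0, 0]]
rows, weighted, accuracy = per_class_metrics(y_true, y_pred)
print(accuracy, round(weighted["recall"], 4))  # 0.6 0.6
```

The TP rate and recall columns always coincide, as they do in the sample output, because both are TP / (TP + FN).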

As an example of the use of the toolbox, the following three graphs are obtained when calling runVidriloClassifier(2,1,'visual','knn','gist'). This trains a kNN classifier on the GIST features extracted from Sequence 2 and evaluates it on Sequence 1.
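A kNN classifier labels each test frame with the majority label among the k training frames whose descriptors are closest to it. The Python sketch below shows this decision rule on generic descriptor vectors such as GIST features; the value of k, the Euclidean distance, the tie-breaking rule and the function name are assumptions rather than the toolbox's settings.

```python
import math
from collections import Counter
from typing import Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (descriptor vector, label)


def knn_predict(train: Sequence[Sample], query: Sequence[float], k: int = 1) -> str:
    """Majority vote among the k training descriptors closest to `query`
    (Euclidean distance). A tied vote goes to the tied label whose
    neighbour is closest."""
    if not train or k < 1:
        raise ValueError("need training samples and k >= 1")
    neighbours = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    top = max(votes.values())
    # neighbours are sorted nearest first, so the first tied label wins
    return next(label for _, label in neighbours if votes[label] == top)


# Made-up 2-D descriptors standing in for real GIST vectors.
train = [([0.0, 0.0], "CR"), ([0.1, 0.0], "CR"), ([0.5, 0.5], "HA"),
         ([1.0, 1.0], "TO"), ([0.9, 1.0], "TO")]
print(knn_predict(train, [0.05, 0.0], k=3))  # CR
print(knn_predict(train, [0.3, 0.3], k=2))   # HA (1-1 tie, HA is closer)
```

For object recognition, the same rule can serve as one of the per-object binary classifiers by using labels such as "present" and "absent".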

As for the metrics, the toolbox prints the following output:

- Only Visual Information
- Classification Model: kNN
- Visual Descriptor: GIST

### ROOMS CLASSIFICATION - DETAILED RESULTS BY ROOM ###

| Room | TP Rate | FP Rate | Precision | Recall | F1-Score | ROC Area |
|---|---|---|---|---|---|---|
| CR | 0.90473 | 0.28693 | 0.79920 | 0.90473 | 0.84870 | 0.80890 |
| HA | 0.04854 | 0.01794 | 0.10870 | 0.04854 | 0.06711 | 0.51530 |
| PO | 0.23387 | 0.00927 | 0.58000 | 0.23387 | 0.33333 | 0.61230 |
| SO | 0.66452 | 0.05640 | 0.44978 | 0.66452 | 0.53646 | 0.80406 |
| TR | 0.53676 | 0.01509 | 0.68224 | 0.53676 | 0.60082 | 0.76084 |
| TO | 0.55372 | 0.05026 | 0.37017 | 0.55372 | 0.44371 | 0.75173 |
| SE | 0.21429 | 0.00611 | 0.60000 | 0.21429 | 0.31579 | 0.60409 |
| VC | 0.27517 | 0.00893 | 0.67213 | 0.27517 | 0.39048 | 0.63312 |
| WH | 0.51429 | 0.02285 | 0.40449 | 0.51429 | 0.45283 | 0.74572 |
| EA | 0.39000 | 0.01879 | 0.47561 | 0.39000 | 0.42857 | 0.68561 |
| W.Avg: | 0.67811 | 0.17068 | 0.66579 | 0.67811 | 0.65374 | 0.75371 |

### ROOMS CLASSIFICATION - OVERALL RESULTS ###

- ROOMS: WELL CLASSIFIED: 1620.
- ROOMS: BAD CLASSIFIED: 769.
- Accuracy: 67.81.
- Root Mean Squared Error: 0.25373.

### OBJECT RECOGNITION - DETAILED RESULTS BY OBJECT ###

| Object | TP Rate | FP Rate | Precision | Recall | F1-Score | ROC Area |
|---|---|---|---|---|---|---|
| Ben | 0.29921 | 0.03747 | 0.48718 | 0.29921 | 0.37073 | 0.63087 |
| Ext | 0.50000 | 0.15513 | 0.35772 | 0.50000 | 0.41706 | 0.67243 |
| Com | 0.67340 | 0.04015 | 0.70423 | 0.67340 | 0.68847 | 0.81662 |
| Tab | 0.70615 | 0.04359 | 0.78481 | 0.70615 | 0.74341 | 0.83128 |
| Cha | 0.61741 | 0.03799 | 0.80902 | 0.61741 | 0.70034 | 0.78971 |
| Boa | 0.46269 | 0.12486 | 0.55578 | 0.46269 | 0.50498 | 0.66891 |
| Pri | 0.41143 | 0.02800 | 0.53731 | 0.41143 | 0.46602 | 0.69171 |
| Boo | 0.67687 | 0.04964 | 0.65677 | 0.67687 | 0.66667 | 0.81361 |
| Uri | 0.55556 | 0.03169 | 0.28846 | 0.55556 | 0.37975 | 0.76193 |
| Sin | 0.31579 | 0.00380 | 0.40000 | 0.31579 | 0.35294 | 0.65600 |
| Han | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.50000 |
| Scr | 0.15854 | 0.01040 | 0.35135 | 0.15854 | 0.21849 | 0.57407 |
| Tra | 0.26011 | 0.08901 | 0.47742 | 0.26011 | 0.33675 | 0.58555 |
| Pho | 0.08889 | 0.01000 | 0.25806 | 0.08889 | 0.13223 | 0.53944 |
| Fri | 0.66667 | 0.01329 | 0.55072 | 0.66667 | 0.60317 | 0.82669 |
| W.Avg: | 0.49219 | 0.06978 | 0.58309 | 0.49219 | 0.52470 | 0.71121 |

### OBJECT RECOGNITION - OVERALL RESULTS ###

- OBJECTS: TOTAL NUMBER OF OBJECTS WELL DETECTED: 1860
- OBJECTS: TOTAL NUMBER OF OBJECTS BAD DETECTED: 1349
- OBJECTS: TOTAL NUMBER OF OBJECTS NOT DETECTED: 1919
- Root Mean Squared Error: 0.30199.
- Average Precision: 0.58.
- Average Recall: 0.49.
- Average F1 score: 0.53.
- Average Area Under Curve (ROC): 0.69.
- Average Precision at 0.25 Recall Level: 0.20.
- Average Precision at 0.50 Recall Level: 0.38.
- Average Precision at 0.75 Recall Level: 0.48.
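Some of the room figures in this output can be checked by hand. Accuracy is the fraction of well-classified test frames. If the W.Avg row weights each room c by its number of test frames n_c (an assumption, but one consistent with the reported values), the weighted recall reduces to the same quantity. The F1-score is the harmonic mean of precision and recall, shown here for room CR:

$$
\text{Accuracy} = \frac{1620}{1620 + 769} = \frac{1620}{2389} \approx 0.67811
$$

$$
\text{W.Avg Recall} = \sum_{c} \frac{n_c}{N} \cdot \frac{TP_c}{n_c} = \frac{\sum_{c} TP_c}{N} = \text{Accuracy}, \qquad N = \sum_{c} n_c = 2389
$$

$$
F_1^{\mathrm{CR}} = \frac{2 \cdot 0.79920 \cdot 0.90473}{0.79920 + 0.90473} \approx 0.84870
$$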